Understanding Linear Algebra, Eigenvectors, and More in Neural Networks and Image Processing

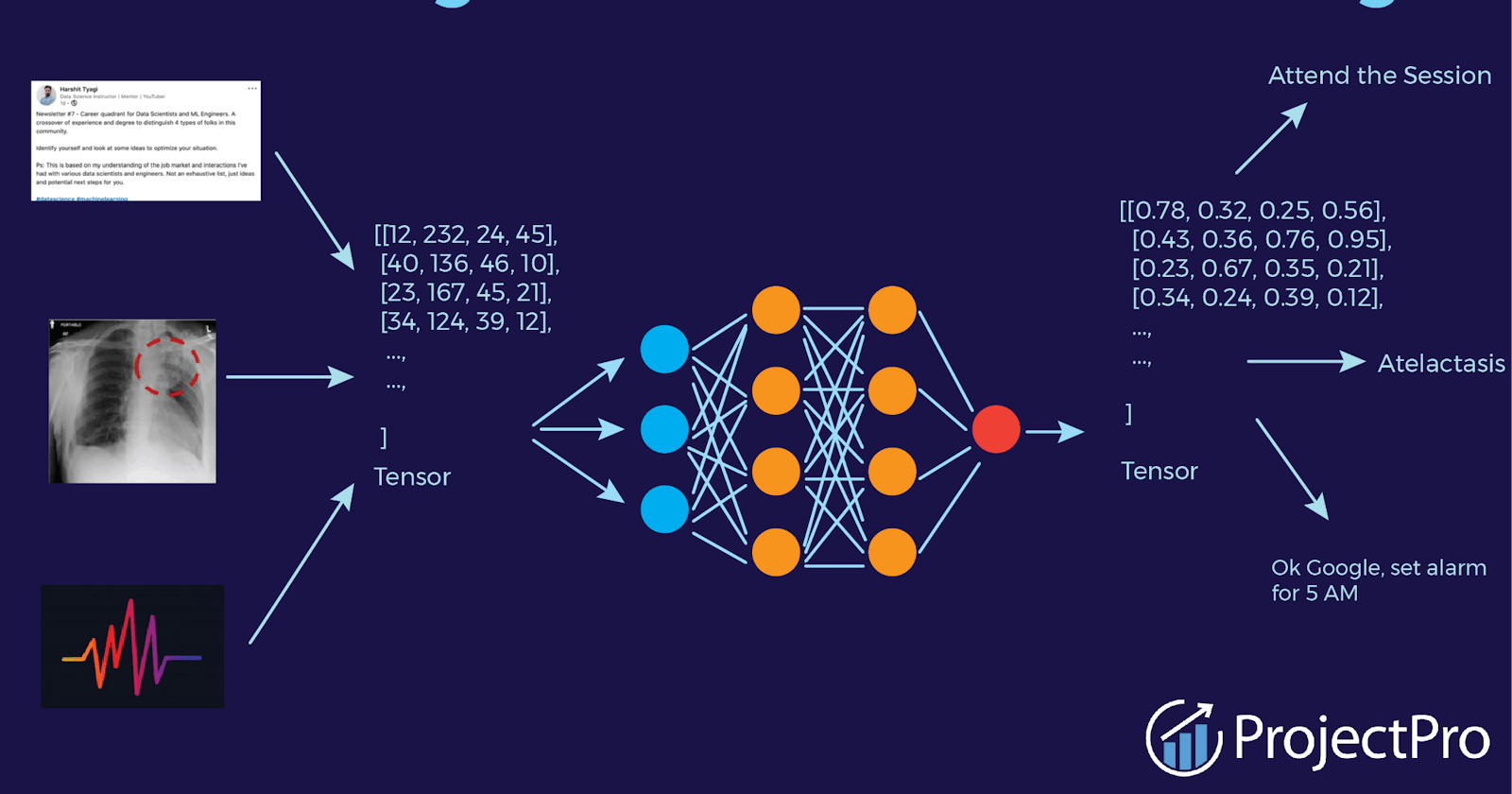

Linear algebra is a fundamental branch of mathematics that underlies many operations in computer vision and machine learning. In the context of neural networks and image processing, it provides a compact and efficient way to represent and manipulate arrays of numbers: vectors (one-dimensional), matrices (two-dimensional, such as a grayscale image), and higher-dimensional arrays (such as a color image or a batch of images).
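As a rough illustration of this representation, here is a minimal Python/NumPy sketch. The image sizes, the random weights `W` and bias `b`, and the three-unit layer are hypothetical choices for demonstration. The grayscale conversion uses the common ITU-R BT.601 luma weights, which are one convention among several.

```python
import numpy as np

rng = np.random.default_rng(0)

# A tiny 4x4 grayscale "image": a matrix of pixel intensities in [0, 1).
image = rng.random((4, 4))

# A fully connected (dense) layer is a matrix-vector product plus a bias:
# flatten the image into a 16-element vector x, then compute y = W x + b.
x = image.reshape(-1)               # shape (16,)
W = rng.standard_normal((3, 16))    # hypothetical weights for 3 output units
b = np.zeros(3)                     # hypothetical bias
y = W @ x + b                       # shape (3,)

# Converting RGB to grayscale is also a matrix product: each pixel's
# (R, G, B) vector is dotted with the BT.601 luma weights.
rgb = rng.random((4, 4, 3))         # height x width x channels
luma_weights = np.array([0.299, 0.587, 0.114])
gray = rgb @ luma_weights           # shape (4, 4)

print(y.shape, gray.shape)
```

Both operations reduce to plain matrix products, which is one reason neural network and image processing libraries rely so heavily on optimized linear algebra routines.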

Eigenvectors and eigenvalues are key concepts in linear algebra that are used in many image processing and computer vision algorithms. An eigenvector of a square matrix is a non-zero vector that, when multiplied by the matrix, results in a scalar multiple of itself: the matrix only stretches, shrinks, or flips it, and never turns it to point along a different line. That scalar is called the eigenvalue. Eigenvectors and eigenvalues can be used to find the dominant directions in data (for example, the eigenvectors of a covariance matrix that have the largest eigenvalues) and to perform dimensionality reduction, among other tasks.
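A minimal NumPy sketch of the definition, assuming a small symmetric example matrix: it checks that A v = λ v holds for each eigenvector, then reduces some synthetic 2-D points to one dimension by projecting them onto the eigenvector of their covariance matrix with the largest eigenvalue. That second step is essentially principal component analysis (PCA), shown as one common use rather than the only one; the data are made up for illustration.

```python
import numpy as np

# A small symmetric example matrix (symmetric matrices, such as covariance
# matrices, have real eigenvalues and orthogonal eigenvectors).
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# eigh is meant for symmetric matrices: it returns eigenvalues in ascending
# order and the matching unit-length eigenvectors as the columns of V.
eigenvalues, V = np.linalg.eigh(A)

for lam, v in zip(eigenvalues, V.T):
    # Defining property: multiplying v by A only rescales it by lambda.
    assert np.allclose(A @ v, lam * v)
    print(f"lambda = {lam:.1f}, v = {np.round(v, 3)}")

# Dimensionality reduction on synthetic 2-D points that mostly vary along (1, 1).
rng = np.random.default_rng(0)
t = rng.standard_normal(200)
data = np.column_stack([t, t]) + 0.1 * rng.standard_normal((200, 2))
centered = data - data.mean(axis=0)

cov = np.cov(centered, rowvar=False)     # 2x2 covariance matrix
cov_vals, cov_vecs = np.linalg.eigh(cov)
dominant = cov_vecs[:, -1]               # direction with the largest eigenvalue
reduced = centered @ dominant            # each point is now a single number
print(dominant, reduced.shape)
```

For the example matrix the eigenvalues are 1 and 3. `np.linalg.eigh` is used instead of `np.linalg.eig` because it is intended for symmetric matrices and returns real eigenvalues in ascending order, so the last column of the eigenvector matrix is the dominant direction.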

A covariance matrix describes how two or more variables vary together. For n variables it is a symmetric n × n matrix: the entry in row i, column j is the covariance between variables i and j, and the diagonal entries are the variances of the individual variables. In image processing, covariance matrices can capture the relationships between different color channels, for example. In neural networks, they can describe how strongly different features in the input data are correlated with one another.
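To make the color-channel example concrete, here is a minimal NumPy sketch using a synthetic image (an assumption for illustration) in which green is built to follow red closely and blue is independent noise. Each pixel is treated as one observation of three variables: R, G, and B.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical 32x32 RGB image: green tracks red, blue is unrelated noise.
red = rng.random((32, 32))
green = 0.8 * red + 0.2 * rng.random((32, 32))
blue = rng.random((32, 32))
image = np.stack([red, green, blue], axis=-1)   # shape (32, 32, 3)

# One row per pixel, one column per channel.
pixels = image.reshape(-1, 3)                   # shape (1024, 3)

# rowvar=False: columns are variables, rows are observations.
cov = np.cov(pixels, rowvar=False)              # 3x3, symmetric
corr = np.corrcoef(pixels, rowvar=False)        # covariances rescaled to [-1, 1]

print(np.round(cov, 3))
print(np.round(corr, 2))
```

With this construction you should see a large positive R/G covariance and values near zero between blue and the other channels. `np.corrcoef` normalizes the covariances into correlations, which are easier to compare when channels have different variances.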

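Linear independence and dependence describe the relationship among a set of vectors. A set of vectors is linearly independent if none of them can be written as a linear combination of the others, and linearly dependent if at least one of them can. For two vectors this reduces to whether one is a scalar multiple of the other, but for three or more it does not: a vector can be the sum of two others without being a multiple of either. In the context of neural networks and image processing, the number of linearly independent vectors (the rank of the matrix they form) tells you how many genuinely independent directions, or degrees of freedom, a set of features has. Linearly dependent features are redundant and add no new information, so removing them can reduce model complexity, which may help to prevent overfitting and improve the accuracy of predictions.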
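Linear dependence can be checked numerically with the matrix rank, which counts the linearly independent columns of a matrix. In this minimal NumPy sketch the feature values are hypothetical; the third feature is the sum of the first two, so the set is linearly dependent even though no feature is a scalar multiple of another.

```python
import numpy as np

# Three hypothetical features measured on four samples.
f1 = np.array([1.0, 0.0, 2.0, 1.0])
f2 = np.array([0.0, 1.0, 1.0, 3.0])
f3 = f1 + f2   # a linear combination of f1 and f2, not a multiple of either

features = np.column_stack([f1, f2, f3])   # shape (4, 3), one column per feature

# The rank is the number of linearly independent columns.
rank = np.linalg.matrix_rank(features)
print(rank)   # 2, not 3: one column is redundant

# Dropping the redundant column loses no independent direction.
print(np.linalg.matrix_rank(features[:, :2]))  # 2
```

In practice, measured features are rarely exactly dependent because of noise. `np.linalg.matrix_rank` decides the rank by comparing singular values against a tolerance, which is worth keeping in mind for real data.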

Let’s say you have a bunch of toy blocks of different colors, and you want to understand how the colors are related to each other. That’s where linear algebra comes in. It’s like a tool that helps you organize and make sense of the information about your toy blocks.

Next, eigenvectors and eigenvalues are like secret directions and secret strengths in the way the colors of your toy blocks are related. The eigenvectors point in the most important directions, and the eigenvalues tell you how strong each of those directions is.

Covariance is a fancy way of saying how much two things change together. So, for your toy blocks, it would tell you whether two color measurements, like how red a block is and how blue it is, tend to go up and down together across all of your blocks.

Linear independence and dependence are like originals and copies. An original is unique, while a copy depends on something else. It’s the same with your toy blocks: some colors are completely different and can’t be made from the others (independent), while some colors can be made by mixing colors you already have, almost as if they were copied from them (dependent).

All of these ideas help us understand the relationships between different things, and they’re important in helping computers understand images and make predictions. Just like you want to understand your toy blocks, computers want to understand things like pictures and make predictions about what they see.